As a software service provider, we at Spaceteams rarely start on a completely green field. Although it happens occasionally, it is far more common that an existing solution needs to be optimised, expanded, or scaled.

To gain a solid understanding of the scope, challenges and opportunities ahead of us, every project begins with a discovery phase. When a tool or solution already exists, evaluating it is an essential part of that phase.

In this article, we will:

- Provide you with an overview of our general approach to evaluating existing software

- Explain in more detail what we focus on during the technical review

- Highlight the benefits of having an independent, external expert assess your software

Our general approach

Step 1: Talk to the experts

We begin by arranging a meeting, or for larger systems a series of meetings or a workshop, where our client walks us through the software. This covers the intended business goals, the user interface, central processes, and any known limitations.

This is never a one-directional presentation. We ask questions throughout and aim to build a holistic understanding of:

- The system’s purpose and user experience

- The development workflow

- Deployment and release processes

- Known issues and constraints

Before moving on, we jointly define the concrete scope of the analysis:

Is the focus usability? Scalability? Security? Maintainability? (Or all of them.)

This alignment ensures we evaluate the system with the right lens.

Step 2: A deeper look

In this step, we dive into the “engine room” of the software. Depending on the setup, this may include product environments, codebases, documentation, and team processes.

Business review

Ideally, we gain access to a running production and/or staging environment. This allows us to:

- Explore the tool as a real user would

- Understand processes directly from the interface

- Assess usability and clarity

- Validate whether the workflows match the intended business logic

We also review available documentation (if any). Interestingly, outdated or missing documentation is one of the most common findings during these evaluations.

Technical review

For the technical review, we request access to the codebase and supporting systems. We investigate questions such as:

- How maintainable and modular is the code?

- Are there structural or architectural bottlenecks?

- Are there security vulnerabilities?

- Is the technology stack modern, appropriate, and scalable?

We also review tools like Jira or other task boards to understand bug history, incident patterns, and existing technical debt.

(We explore this step further in the dedicated “Technical review” section below.)

Process review

Software quality is tightly linked to team processes. We therefore examine:

- Collaboration methods

- Task and release workflows

- Deployment and CI/CD structures

- Communication between stakeholders and developers

This gives us valuable context about how the software has been built and maintained so far.

Step 3: Synthesis

Evaluation is never a one-person activity at Spaceteams. We always discuss findings with at least one colleague, challenge assumptions, validate perspectives, and jointly prioritise the issues we uncover.

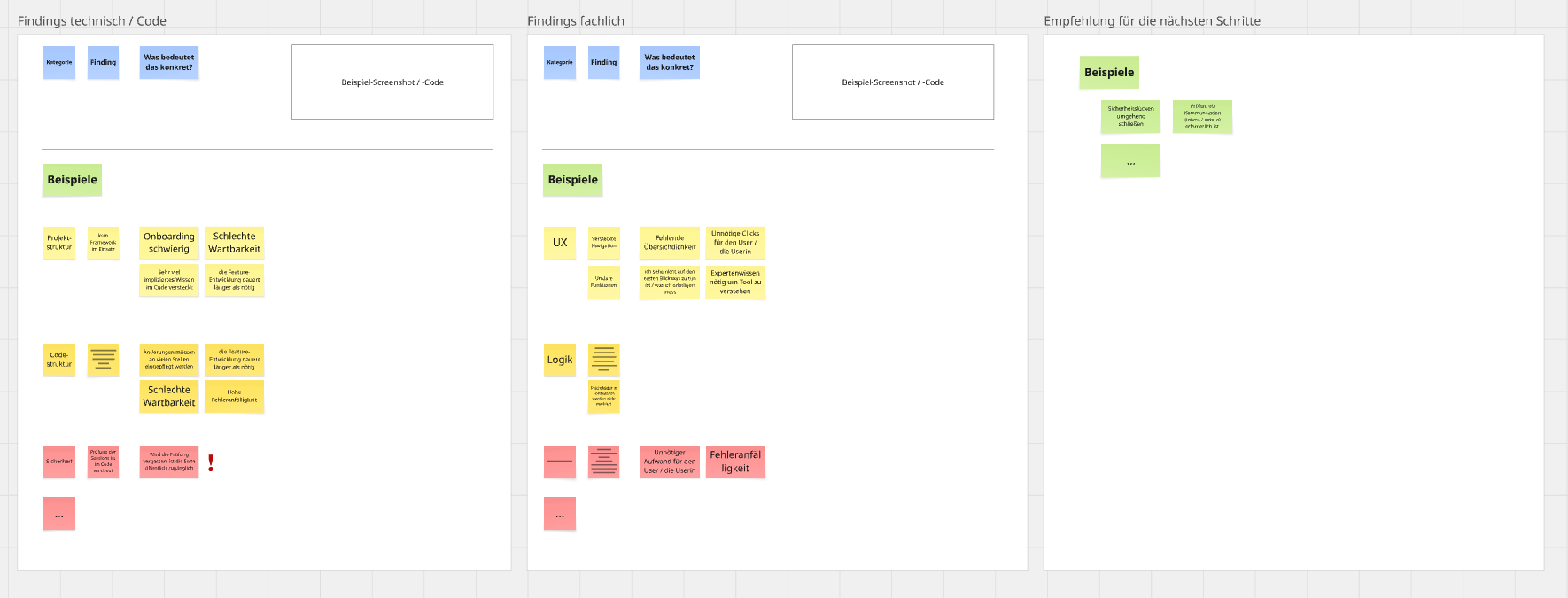

The results are then summarised for our clients with:

- Clear priorities

- Concrete examples (including code excerpts, if helpful)

- Identified risks

- Recommendations for next steps

Rather than producing a traditional PDF report, we usually document everything in a structured way using Miro — our favourite tool for visual clarity and collaboration.

Step 4: Customer presentation & discussion

Usually after one to two weeks, we meet with our client to present the findings. We walk through essential insights, show relevant examples, and openly discuss possible next steps.

Sometimes these discussions happen immediately; sometimes a separate follow-up session makes more sense — depending on the complexity and the client’s needs.

The technical review — what exactly do we do?

Before we recommend improvements or plan a redevelopment, we invest time in thoroughly understanding the software you already have. A solid technical analysis allows us to see what’s working well, where potential risks lie, and how the system can evolve in a sustainable way. This phase gives both sides clarity and aligns expectations for the rest of the project.

1. Exploring the application first-hand

We start by using the application just as an end user would. Whether it’s a customer-facing web app or an internal business tool, this step helps us understand its purpose, the main user journeys, and how the system behaves under everyday use. It’s also where we notice early indicators such as slow-loading pages, unclear flows, or inconsistencies in the interface. These first impressions guide us toward areas that may require deeper investigation later on.

2. Reviewing architecture & flow diagrams

If architecture or flow diagrams are available, we study them next. Even a simple diagram can reveal a lot about how the system is deployed and structured: whether it runs in the cloud or on-premises, how components communicate, and which external services or integrations are involved. Diagrams also help us spot potential bottlenecks or entry points where security vulnerabilities could exist. Having this high-level context makes it much easier to interpret what we later see in the code.

3. First glance at the codebase

With that foundation in place, we take an initial pass through the code. The goal at this stage isn’t to understand every detail but to get a feeling for the chosen tech stack, the frameworks and libraries in use, and the overall architectural style. We look for things like outdated dependencies, unusual structural decisions, or early patterns that stand out — both good and bad. This first sweep often gives us a sense of the system’s maintainability and how easy (or challenging) any future changes might be.

4. Running the tests

If the project includes automated tests, they become an invaluable source of information. Tests often reveal how developers intended the system to behave, which edge cases they cared about, and how confidently changes can be made without breaking existing functionality. The presence, structure, and coverage of tests say a lot about the maturity of the project. When tests are missing or outdated, that’s equally telling.
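To illustrate how a test suite can document intent, here is a minimal sketch in Python. The `shipping_cost` function and its free-shipping threshold are purely hypothetical and not taken from any reviewed project; the point is only that the test names and the boundary case tell a reviewer which behaviour the original developers considered important.

```python
import unittest


def shipping_cost(order_total: float) -> float:
    """Hypothetical business rule: orders of 50.00 or more ship for free."""
    if order_total < 0:
        raise ValueError("order total must not be negative")
    return 0.0 if order_total >= 50.0 else 4.99


class ShippingCostTest(unittest.TestCase):
    def test_small_orders_pay_flat_fee(self):
        self.assertEqual(shipping_cost(10.0), 4.99)

    def test_threshold_itself_ships_free(self):
        # Edge case: the boundary value belongs to the free tier.
        self.assertEqual(shipping_cost(50.0), 0.0)

    def test_negative_totals_are_rejected(self):
        with self.assertRaises(ValueError):
            shipping_cost(-1.0)
```

Saved as a test module, this can be run with `python -m unittest`. Reading the three test names alone already conveys the rule, its boundary, and its error handling.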

5. Deep dives into key code areas

Once we understand the broader picture, we dive deeper into specific parts of the codebase, usually the areas that handle crucial data flows, business logic, or user interactions. We analyse how data travels through the system, how users are authenticated and authorised, and whether sensitive information is handled securely. At this stage, we often uncover issues such as hardcoded secrets, unsafe database access patterns, or modules that are so tightly coupled that they hinder future scalability. This is where the real story of the application starts to become clear.
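As a rough illustration of what looking for hardcoded secrets can mean in practice, the following Python sketch scans source text for assignments that look like credentials. The pattern, the function name `find_hardcoded_secrets`, and the sample source are assumptions made for this example; dedicated secret scanners use far more rules, and a manual review goes beyond pattern matching.

```python
import re

# Hypothetical pattern for illustration only: a credential-like name
# that is assigned a quoted string literal.
SECRET_PATTERN = re.compile(
    r"""(password|passwd|secret|api_key|token)\w*\s*=\s*["'][^"']+["']""",
    re.IGNORECASE,
)


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, line) pairs that look like hardcoded credentials."""
    hits = []
    for number, line in enumerate(source.splitlines(), start=1):
        if SECRET_PATTERN.search(line):
            hits.append((number, line.strip()))
    return hits


# Invented sample source: one literal secret, one value read from the environment.
SAMPLE = '''
import os
DB_PASSWORD = "hunter2"
API_KEY = os.environ["API_KEY"]
'''

print(find_hardcoded_secrets(SAMPLE))
```

In this sample, only the literal password assignment is flagged; the key read from the environment is not, which is the distinction a reviewer cares about.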

6. Checking against OWASP vulnerabilities

Security is always a priority, so we perform a focused review for common OWASP vulnerabilities. This includes things like injection risks, weak authentication mechanisms, exposure of sensitive data, cross-site scripting issues, or outdated components that contain known security flaws. Even a lightweight pass can reveal important risks that should be addressed early in the project.
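Injection risks are easiest to understand with a small example. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the table, the function names, and the data are invented for illustration. It contrasts a query built by string interpolation with a parameterised query.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    """Create an in-memory database with two invented users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Vulnerable: user input becomes part of the SQL text itself.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Safer: the value is bound as data and never interpreted as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = setup()
malicious = "' OR '1'='1"
print(len(find_user_unsafe(conn, malicious)))  # every row matches
print(len(find_user_safe(conn, malicious)))    # no row matches
```

With the crafted input, the interpolated query returns both users because the condition is rewritten to be always true, while the parameterised query treats the input as an ordinary (non-existent) name.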

7. Documenting findings transparently

Throughout the process, we document everything in a shared Miro board. Each finding includes the context of the issue, a relevant code snippet, an explanation of why it matters, its severity, and a recommended approach to fix it. This creates a clear, transparent foundation for discussing next steps with your team and ensures we move forward with a shared understanding of the system’s current state.
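The structure of a finding described above can be sketched as a small data model. This is only an illustration in Python: the field names mirror the elements listed in the text, while the four-level `Severity` scale, the `prioritise` helper, and the example findings are assumptions made for this sketch and not an export of a real board.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Assumed four-level scale; the actual scale may differ per project.
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


@dataclass(frozen=True)
class Finding:
    context: str
    code_snippet: str
    why_it_matters: str
    severity: Severity
    recommendation: str


def prioritise(findings: list[Finding]) -> list[Finding]:
    """Order findings from most to least severe."""
    return sorted(findings, key=lambda f: f.severity, reverse=True)


# Invented example findings.
findings = [
    Finding("Report export module", "export() calls billing internals directly",
            "Tight coupling makes changes risky", Severity.MEDIUM,
            "Introduce an interface between the modules"),
    Finding("Database configuration", 'DB_PASSWORD = "..."',
            "Credentials are visible to anyone with repository access",
            Severity.CRITICAL, "Load secrets from the environment and rotate them"),
    Finding("README", "(no snippet)", "Setup steps are outdated",
            Severity.LOW, "Update the setup instructions"),
]

for finding in prioritise(findings):
    print(finding.severity.name, "-", finding.context)
```

Keeping severity as an ordered type means the priority order shown to the client follows directly from the recorded data rather than from manual sorting.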

Why an external review is valuable

From our perspective, external reviews are often not only valuable but simply necessary. This is especially the case when a company uses customised software but has no internal specialists who can evaluate it. In other cases, internal expertise exists, but the specialists do not have enough capacity.

If your organisation does not have internal experts who can perform a detailed software assessment, you may not be aware of risks such as:

- Architectural or structural weaknesses in your software

- Security vulnerabilities

- Outdated or tightly coupled code

- Accumulated technical debt

- Insufficient test setup

These issues often remain invisible until they become costly problems. Weaknesses in architecture and code slow down development, cause unexpected errors, and make your software increasingly difficult to maintain. Security vulnerabilities are an even bigger problem because they leave your organisation exposed to external attacks.

Even if you have internal experts available, an external perspective is incredibly valuable. External evaluators have no emotional or political attachment to the product and can therefore provide an unbiased, objective assessment. They can also bring fresh experience from other projects and benchmark your system against industry standards.

For example, we recently helped the Berliner Stadtmission with such an unbiased “view from the outside”, analysing the technical side (structure, maintenance effort, security) as well as the organisational side (usability, processes, …). With the results, the client has a comprehensive overview of where they stand and which areas can potentially be improved.

How we ensure the “external perspective” at Spaceteams

At Spaceteams, we believe in the great added value of an external perspective on our own projects. Because we work in multiple teams for different customers, we have set up several cross-team practices to guarantee that external perspective:

- Architecture reviews for new projects: Our senior developers challenge proposed architectures and technology choices early in the process.

- Regular technical show-and-tell sessions: Our teams present their solutions to one another, share lessons learned, and discuss improvements.

- Weekly product sync: Our team leads have weekly syncs with our head of engineering to discuss, among other things, technical challenges and chosen solutions.

Do you want to know whether your software systems are in good shape in terms of security, maintainability or usability? Then a status quo analysis by an external software expert is a good way to find out. Just contact us or book a free consultation to learn more!